Music

Music and fractals

Both music and fractals have multi-level structures. This makes me think of two possible ways of converting a fractal into sound or music. Given a fractal on a 2D plane, we can view each vertical slice of pixels as either a waveform or a spectrum, and scan across the fractal horizontally to produce playable audio. This may require the fractal to be a real-valued map (instead of a binary one). The waveform option feels more reasonable because it is more likely to produce non-uniform characteristics in the sound, such as varying intensities across frequencies. However, it will be tricky to connect consecutive waveforms smoothly across time.

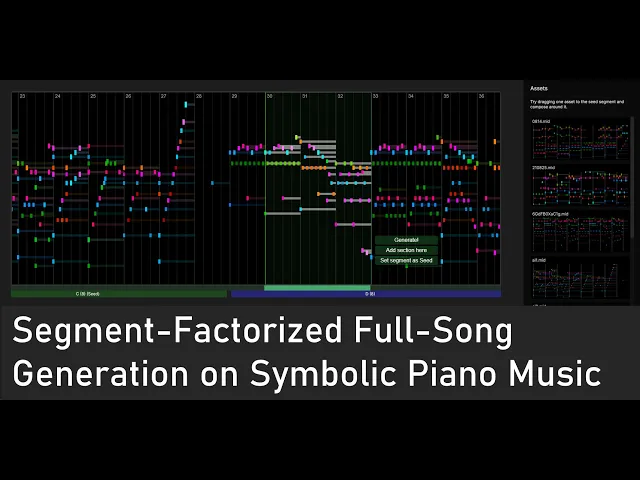
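To make the column-scanning idea above concrete, here is a minimal sketch in Python with NumPy. It is only an illustration under several assumptions of mine: the fractal is a Mandelbrot escape-count map (any real-valued 2D array would do), the function names are hypothetical, and the spectrum variant uses random phases because a column of pixels only supplies magnitudes.

```python
import numpy as np

def mandelbrot_map(width=64, height=128, max_iter=50):
    """Real-valued fractal image: normalized escape counts in [0, 1]."""
    xs = np.linspace(-2.0, 0.5, width)
    ys = np.linspace(-1.25, 1.25, height)
    c = xs[None, :] + 1j * ys[:, None]        # shape (height, width)
    z = np.zeros_like(c)
    counts = np.zeros(c.shape, dtype=float)
    for _ in range(max_iter):
        mask = np.abs(z) <= 2.0               # points that have not escaped yet
        z[mask] = z[mask] ** 2 + c[mask]
        counts += mask
    return counts / max_iter

def normalize(audio):
    """Scale audio so that its peak absolute value is at most 1."""
    peak = np.abs(audio).max()
    return audio / peak if peak > 0 else audio

def columns_as_waveforms(image):
    """Option 1: each column is a short waveform; play columns left to right."""
    centered = image - image.mean(axis=0, keepdims=True)  # remove per-column DC offset
    # Plain concatenation: the jumps between columns are the
    # "connecting waveforms across time" problem mentioned in the text.
    return normalize(centered.T.reshape(-1))

def columns_as_spectra(image, frame_len=256, seed=0):
    """Option 2: each column is a magnitude spectrum; synthesize one frame per column."""
    rng = np.random.default_rng(seed)
    height, width = image.shape
    n_bins = frame_len // 2 + 1
    bin_pos = np.linspace(0, height - 1, n_bins)
    frames = []
    for col in range(width):
        # Resample the column to the number of frequency bins.
        mags = np.interp(bin_pos, np.arange(height), image[:, col])
        mags[0] = 0.0                          # drop the DC component
        # Assumption: random phases, since the image carries no phase information.
        phases = rng.uniform(0.0, 2.0 * np.pi, n_bins)
        frame = np.fft.irfft(mags * np.exp(1j * phases), n=frame_len)
        frames.append(frame * np.hanning(frame_len))  # taper frame edges
    return normalize(np.concatenate(frames))

image = mandelbrot_map()
audio_wave = columns_as_waveforms(image)
audio_spec = columns_as_spectra(image)
```

Both results are float arrays in the range [-1, 1], which could then be written to a WAV file at a chosen sample rate. The waveform version simply concatenates the columns, so it makes the discontinuity problem audible rather than solving it; crossfading or overlapping adjacent columns would be one possible next step.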

Segment Factorized Full Song Generation on Symbolic Piano Music

My first music generation paper. 🎉 We propose a full-song generation model with selective attention to related segments. It can collaborate smoothly with a human composer.

My first music generation app

After 3 years of training music generation models and interacting with them through the CLI, this is my first attempt to build a user interface for them. Playing with it feels really great; it feels like the model is actually usable. https://github.com/eri24816/midi-gen The user interface has a piano roll where you can write music. When needed, you can ask the AI to generate a bar for you. It offers multiple options to choose from, and the chosen one can be edited further. Occasionally, music emerges that sounds amazingly good but that you would not have thought of yourself. I believe that kind of “accident” is the main value of this AI in music composition.